Exercise 3: Bayesian non-parametric density estimation

Goal

In this exercise, you will implement an MCMC algorithm for Bayesian non-parametric density estimation. Various topics on Gibbs, conjugate models, Metropolis-Hastings and issues arising from implementation of probabilistic inference software will be touched on at the same time.

Resources for this assignment

You are provided with a scaffold for this project, containing both code and most of the detailed instructions for the main parts of the assignment. See the github page to see what you should do next in terms of implementation. We will also give more information at the labs.

Data analysis

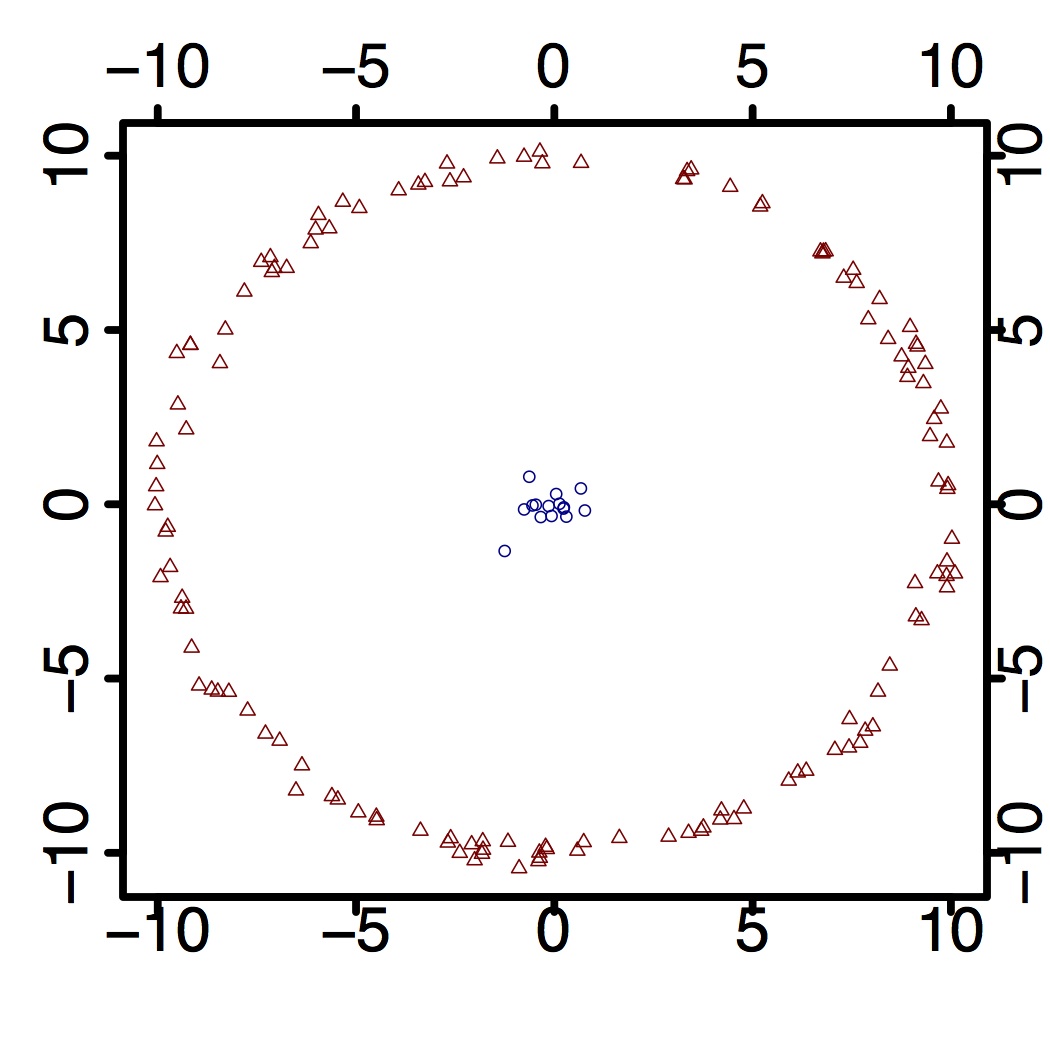

A few small datasets are provided in the repository, but they are fairly simple, synthetic and small (see right for an example).

I therefore recommend that you optionally pick a real dataset of your choice. For example, the city of Vancouver has some interesting datasets at this address, which you could use to model the density of anything from graffitis to heritage buildings. The World Bank, UCI, are also a classic source, let me know if you have other suggestions to add here.

Optional theoretical questions

These questions are optional, but will help you understand the implementation part of this exercise.

- Derive the predictive distribution of the Dirichlet process mixture (DPM) model. Make sure you formula can be computed in time linear in the number of tables.

- Fix one point $x_0 \in \Xscr$. Let $F$ denote the unknown density modelled by the DPM. What is the Bayes estimator of $F(x_0)$ for the mean squared error?

Harder optional question and project ideas

This scaffold can be a good starting point to extend for a project. Robust extensions will be offered to integrate with the scaffold to release as an open source library.

Other tests for the correctness of the Gibbs sampler

Try some other strategies for testing the correctness of the sampler, for example:

- Enumerate all the partitions explicitly on a small example to check the sampler converges to the correct posterior.

- Look at this paper: Geweke 2004. You should be able to use the code from the first assignment if you want to try this idea.

Alternative to Gibbs

A simple alternative to Gibbs which can actually be shown to dominate Gibbs is described in this classic paper, Peskun 1973. Try the idea in the CRP case to see if it makes the sampling more computationally efficient.

More!

I have many other suggestions, some of which I will cover in class, some of which I can tell you in person. Thing related to:

- High-$p$ regime: Rank one update and beyond.

- Other likelihoods, classic methods for non-conjugate cases; new, discretized methods for non-conjugate cases

- Objective comparison and combination of existing samplers: stick-breaking (truncated and auxiliary variable-based), collapsed.

- New samplers/moves (symmetrized, stick permutation, ...)

Handing-in

Simply submit one zip file containing the whole git repository, called xxxxxx-ex3-q1.zip and one pdf with a short report of what you did, xxxxxx-ex3-q2.pdf. Here xxxxxx should be replaced by your student number.

Hints

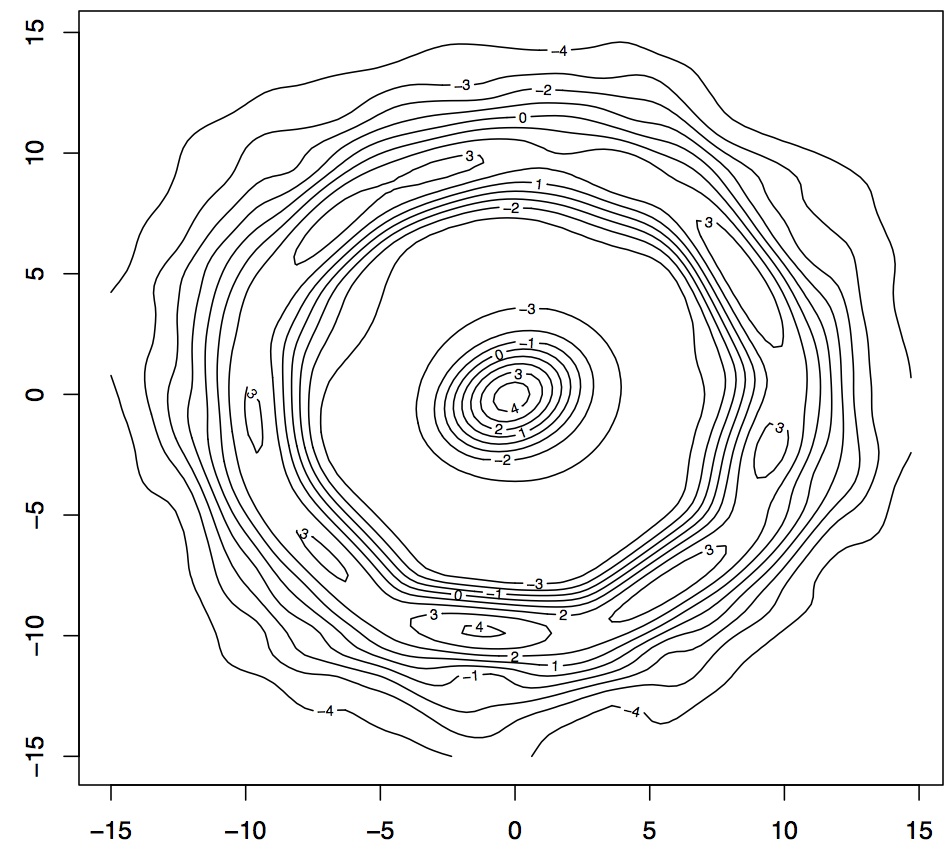

You should get something of that form for the predictive distribution: